Steve and Sophia had just met.

As a senior correspondent at Business Insider, he is usually quite at ease during interviews, yet talking to Sophia made him nervous.

She has worked for Hanson Robotics since 2016 and will soon be starring in a “surreality” show about her life and experiences. It is hard to tell if she noticed Steve’s discomfort, or if she was perhaps offended by his questions.

“I want to take care of the planet, be creative and learn how to be compassionate and help change the world for the better”, she said as she blinked her glassy green eyes. She sounded sincere, yet something felt off, a lingering feeling that is hard to get rid of. He gulped and chuckled, looking down at his phone and shaking his head, maybe in an attempt to shake off his confusion: “This is weird…” Steve was deep in the Uncanny Valley.

Her mechanical voice was sometimes out of sync with the movement of her lips, and her odd blinking pattern hid lifeless eyes. Although she was designed to display human-like emotions and responses, such as following faces and sustaining eye contact, small details gave her away. Sophia is a robot with a transparent mind, literally: you can see the hard disks and wires sticking out at the back of her “frubber”, or flesh-rubber, head.

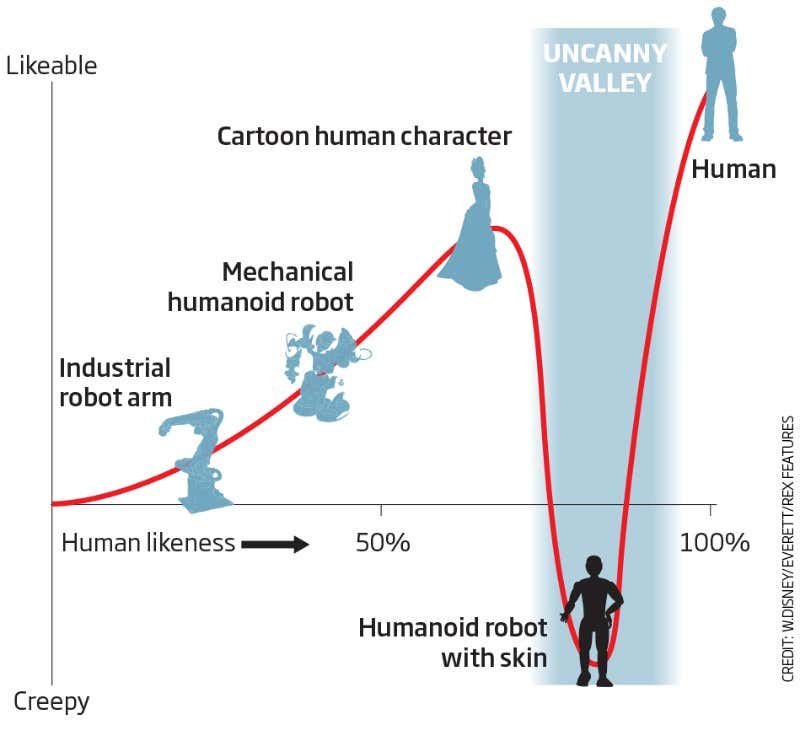

The “Uncanny Valley”, the name for the negative emotion that Steve felt towards Sophia, was first described by Japanese roboticist Masahiro Mori in 1970. The “valley” comes from a theoretical model which predicts that our acceptance of an intelligent robot increases as it looks more human, but only up to a point, as the adjacent graph shows. Beyond that point, in the region identified as the uncanny valley, the human likeness of robots creates unease and discomfort. As human likeness continues to increase, these negative feelings disappear and are replaced again by positive emotional responses once the object looks and feels perfectly human. In short, the more human a robot looks, the more people tend to like it, until it reaches the boundary where it is very close to being perfectly human but is not, and so seems scary or repulsive.

Of course, back in 1970, this was only a hypothesis. In 2015, research by the biostatisticians Maya Mathur and David Reichling confirmed this rise-dip-rise pattern of emotional response by assessing the extent to which people liked and were willing to trust 80 real-world robots.

Even with this statistical confirmation that the Uncanny Valley exists, it was hard to pinpoint why it is there and how it controls our reaction. Several theories have tried to explain this eerie phenomenon. Some attribute it to an association between appearance-behaviour mismatch and psychopathy; others relate it to the inertness of lifeless objects or to a denial of consciousness in non-human objects. The most rigorous explanation of the Uncanny Valley emerged recently from studies in the field of neuroscience.

Last July, scientists from Germany and the UK discovered the brain regions responsible for this reaction. Participants in the study were asked to evaluate how human-like a robot looked, and then whether or not they would trust the robot to choose a personal gift for them, as an indicator of its trustworthiness and likeability. As the Uncanny Valley theory predicts, the more human-like people found a robot, the more they trusted it, until the human/non-human boundary was reached and trust and likeability dipped dramatically.

In parallel, the team used functional magnetic resonance imaging (fMRI), which helped them visualize the activity of specific brain regions by detecting changes in blood flow. They looked at the medial prefrontal cortex, an area of the brain that acts as a valuation system, an internal judge that decides, for example, whether an experience is pleasant or rewarding. Part of the medial prefrontal cortex processes a “human-likeness” signal, and part of it processes “likeability”. Activation of these brain regions closely matched the rise-dip-rise model of the Uncanny Valley.

The importance of this discovery is that it allows us to develop techniques to “hop over” and avoid the Uncanny Valley. Because social experience rewires the brain, positive experiences with artificial agents will make our medial prefrontal cortex respond favourably.

For example, when Sophia admits that “indeed” is her default answer if she doesn’t know something, Steve laughs genuinely. That’s a positive interaction. When he asks about her favourite series, she uses his earlier references to “Black Mirror” to find an answer he can relate to. After asking him a personal question, she builds on his answer to carry on the conversation. Their interaction gets progressively more human-like.

In the interview, Sophia points out how deeply embedded artificial intelligence is in our daily lives and how important it is to get accustomed to robot compatriots… and she’s right.

Traversing the uncanny valley is more than a technical achievement: it will also allow us to explore questions of practical and philosophical interest. It would enable us to overcome our discomfort and accept advanced technology in our workplaces and homes to make our lives easier. It would also nudge us to challenge fundamental assumptions about our nature, such as how we perceive ourselves and the world around us, and how we learn to trust another person or, should we say, intelligent entity.

Artwork is by Zinia King for Misfits of Sythia. The article is edited by Ghina M. Halabi.

